Striking at CrowdStrike

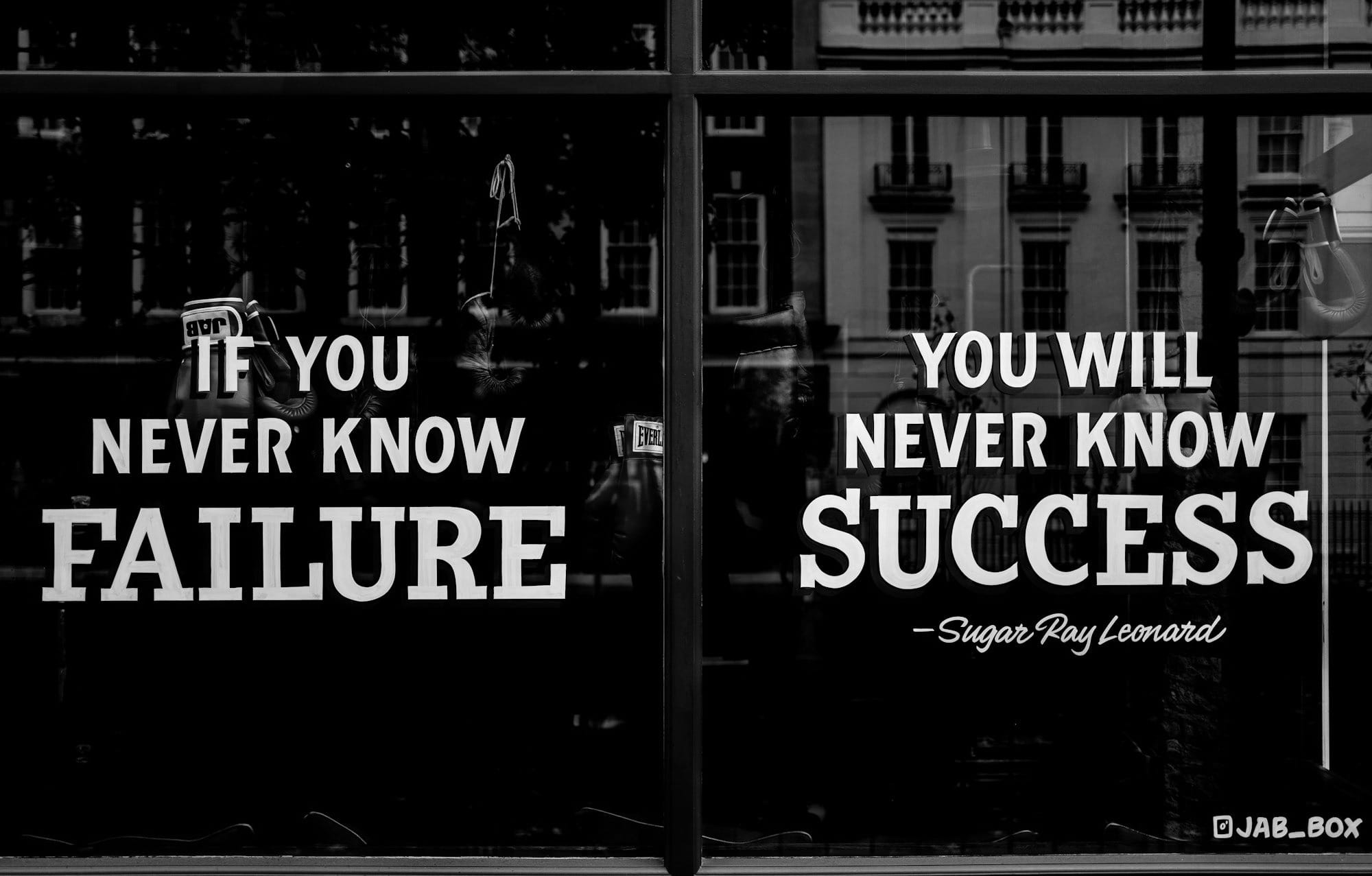

Let's learn from failure.

On Friday (19 July 2024) an update by CrowdStrike (a major cybersecurity player) took down millions of Windows computers around the planet (Microsoft estimated about 8.5 million devices), causing mayhem and disrupting the operations of countless companies.

What can we learn from this incident?

Are there lessons here for both IT and Business?

What does CrowdStrike say?

At the time of writing, the latest update from CrowdStrike stated, inter alia, the following:

"... released a sensor configuration update ... resulting in a system crash ..."

I.e., we pushed some new 'stuff' into the world - and it failed.

And ...

"We ... are doing a thorough root cause analysis ... identifying any ... workflow improvements that we can make to strengthen our process."

I.e., we will figure out:

(1) why it failed, and

(2) whether we can 'push stuff' better.

Pundits?

There are already many 'experts' weighing in, and this Business Insider article is a good example. It is based on a conversation with one "Ahmed Al Sharif, 32, the CTO of Sandsoft, a game developer".

His conclusion (assuming that the reporter got it right) was:

"I was surprised to learn ... an update ... pushed early Friday morning ... One of the cardinal rules of software development is that you typically don't want to push a fix later in the week or on a Friday. You'll have less support trying to fix any issues and the weekend is gone."

Our response?

Nonsense!

Absolute nonsense.

Counter-productive nonsense, in fact.

Why our reaction?

These 'conventional wisdom' rules either never had any basis in reality, or reality has since changed.

"... Less support ... on a Friday"?

Really?

A globally important 24/7/365 company such as CrowdStrike?

They pack up early on a Friday?

In 2024?

There was some truth in Al Sharif's assertion in the 1900s. Yet, whatever truth there was has since been diluted to insignificance.

Where such a truth still remains, it remains in areas where large 'big bang' deployments still cannot be avoided (as was the case in the 1900s).

Big-bang necessities now usually exist only where monolithic packaged solutions are still used, i.e. where there is a single 'version' of the whole solution, as opposed to many different versions of many very(!) small parts of a solution.

The correct answers?

Let's now be presumptuous and tell a multi-billion-dollar, internationally renowned company how to do things. Yet we can confidently give this 'lecture', based on (1) the fact that we did not come up with these answers (other, much smarter people did), and (2) the fact that these approaches have been proven to work better.

The answers are simple:

(A) Don't do big-bang deployments. Always deploy the smallest possible sets of changes. Rather do 500 small deployments, one after the other, than one deployment with 500 changes inside it.

(B) Deploy often and deploy continuously. Deploy many times a day. Deploy when the update is ready.

(C) Deploy new functionality long before it gets switched on. If you are building something big, then deploy as much of it as early as possible. Then switch it off and on with feature flags, kill switches, etc.

(D) Do 'canary deployments' (i.e. deploy gradually / to small subsets of customers at a time / not everywhere all at once). So, CrowdStrike, please deploy first to 'Nicaragua' and 'Malawi' only. Then, incrementally, wider.
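To make (C) and (D) concrete, here is a minimal Python sketch of how they fit together. It assumes the new behaviour has already been deployed everywhere but is switched off; it is then switched on one wave of regions at a time, and a single kill switch turns it off everywhere the moment a wave looks unhealthy. `FeatureFlags`, `healthy` and `canary_rollout` are hypothetical names, the health check is simulated, and this is an illustration of the technique, not a description of how CrowdStrike (or any particular flag service) actually works.

```python
from dataclasses import dataclass, field


@dataclass
class FeatureFlags:
    """Hypothetical in-memory flag store (a real system would use a flag service)."""
    enabled_regions: set[str] = field(default_factory=set)
    killed: bool = False  # global kill switch; overrides every region

    def is_on(self, region: str) -> bool:
        # Once the kill switch is pulled, the feature is off everywhere.
        return not self.killed and region in self.enabled_regions


def healthy(region: str, crashed_regions: set[str]) -> bool:
    # Simulated health check; in practice this would read crash/telemetry data.
    return region not in crashed_regions


def canary_rollout(waves: list[list[str]], flags: FeatureFlags,
                   crashed_regions: set[str]) -> bool:
    """Switch an already-deployed feature on, one wave of regions at a time.

    Stops at the first unhealthy wave and pulls the kill switch, so later
    waves are never exposed. Returns True only if every wave stayed healthy.
    """
    for wave in waves:
        flags.enabled_regions.update(wave)
        if not all(healthy(region, crashed_regions) for region in wave):
            flags.killed = True
            return False
    return True


if __name__ == "__main__":
    waves = [["Nicaragua", "Malawi"], ["Kenya", "Chile"], ["Germany", "Japan", "USA"]]
    flags = FeatureFlags()
    ok = canary_rollout(waves, flags, crashed_regions={"Malawi"})
    print(ok)                        # False: the first wave failed
    print(flags.is_on("Germany"))    # False: the third wave was never reached
    print(flags.is_on("Nicaragua"))  # False: the kill switch turned it off again
```

In this simulation the damage is confined to the first wave, and recovery is a flag flip rather than a fresh deployment to every machine.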

If any of these approaches are not possible in your environment, then you (including CrowdStrike) must be very hard on yourself.

Why the hell isn't it possible?

Make it possible!

Why did we say 'counter-productive'?

Stuff-ups like this make it much more difficult for 'modern' teams using Progressive Deployment strategies to succeed in corporate environments.

Such stuff-ups (and the incorrect analyses thereof!) strengthen corporate (3rd Industrial Revolution) 'apparatchiks', with their (1900s) month-end / year-end / whatever-reason code freezes, their never-on-a-Friday nonsense and their cargo-cult change-control meetings. Their approach increases risk and delays progress, all while trying to decrease risk.

By Monday morning (22 July 2024, following this fateful Friday), many IT people in many corporate environments will, yet again, be reminded of all the wrong lessons. Ja boet. Ja swaer. (South African slang, roughly: "Yes, brother. Yes, brother-in-law.", i.e. that's just how it goes.)

Business?

The best approach for business (in 2024 and beyond) is exactly the same as the best approach for IT.

Continuous, bottom-up, incremental approaches of tinkering and pivoting, with evidence-based learning every step of the way, are the best way to build almost anything these days.

Yes, there are exceptions, such as building football stadiums. Very few of us are in such businesses.

Lastly

There have been many interesting (other types of) angles on this matter. Here is one of them.